... Ok, ok, I will elaborate. But first I feel the need to explain what we are talking about here, to anybody who does not have a Ph.D. in particle physics and is still reading this column.

Background: The Tevatron, CDF, and the W boson

The stage is set at the Tevatron collider, which held the record for the highest-energy collisions for twenty-five years, until the advent of the Large Hadron Collider at CERN. It operated from 1985 to 2011, and delivered proton-antiproton collisions to two large multi-purpose experiments, CDF and DZERO. The two experiments used the data to discover the sixth and most massive quark, the top, and to measure its properties; but along with that, the experimental collaborations produced a large number of exquisite physics measurements, the discovery of a handful of new composite hadrons, and a few claims of new physics that petered out with time and more data. I wrote a book on the CDF experiment and the science mentioned above; see the bottom of this post for a link to it.

Much like the Tevatron, CDF was a glorious scientific instrument, and I still feel proud of having constructed a part of it (the CMX muon system), operated it as scientific coordinator in the control room countless times day and night, and contributed to several important measurements, including the top quark search. But this post is not about me - I left the experiment in 2012, when I felt it was the decent thing to do as the other experiment I was contributing to was in direct competition on several measurements. Now I do regret my past scientific integrity a little, as I would love to have been able to participate in the production of the new measurement of the W boson mass, which CDF published on April 7 in Science magazine and described in a highly attended seminar yesterday evening. But being an outsider allows me to take an independent look at this important result, which I will do below.

The W boson is an intriguing elementary particle. It is an electrically charged particle responsible for the transmutation of matter - the dream of alchemists for a millennium! W bosons mediate in fact the reactions that change the flavour of a quark into another. There are six kinds of quarks in nature, but ordinary nuclei mostly contain the two lightest ones, the up and down flavours. The W boson allows them to turn into one another, and in so doing, e.g., neutrons can become protons - as a down-quark in the neutron becomes an up quark, the (udd) quark content of the neutron turns into (uud), a proton's recipe. Radioactive elements do their stuff thanks mostly to this reaction.

But W bosons don't just pull that off. Their existence is at the very basis of the existence of our universe, as without the weak interaction these particles mediate, there would be no stars to look up to in the sky, no galaxies, no nuclear fusion engines providing local patches of the universe with an energy source.

The W boson was discovered (along with its neutral counterpart, the Z) in 1983 by the UA1 team led by Carlo Rubbia, who imagined and directed the experiment which, by converting a proton-only accelerator at CERN into the SppS proton-antiproton collider, allowed for collisions of sufficient energy to produce W and Z bosons, and their detection in a giant apparatus. The discovery of the W and Z particles came with a first measurement of their mass, which is the crucial attribute of elementary bodies.

We have a theory, the Standard Model (SM), which allows us to understand the phenomenology involving reactions where these particles take part, and the theory is extremely predictive: it allows us to compute with very high precision the probability that different reactions take place. The W boson mass has a very important role in the inner workings of the SM, so that by studying other reactions - ones not directly involving observable W bosons - we can predict what the W mass is, with a precision currently better than a part in ten thousand.

Endowed with such a precise prediction, we have always wanted to measure the W boson mass (and the Z boson mass, but that is another story) with as much accuracy as possible. When the Tevatron started to produce large amounts of proton-antiproton collisions (which, once in a million times, generate a W boson) the CDF and DZERO experiments cooked up increasingly precise estimates of the W mass. And in so doing they had to use all their ingenuity, as these measurements are exceedingly difficult!

Sizing it up

At hadron colliders, in order to obtain a very precise measurement you have to account for a huge number of tiny systematic effects that may bias your result. And the need to understand those effects to the tiniest level becomes stricter as you collect more data, because the statistical precision increases, forcing you to do more precise studies. You also benefit, of course, from the added data. In the end, the latest CDF measurement published the other day took a decade to pull off, and involved an unknown number of person-years of effort (I would tend to estimate it as a century's worth of personpower, even leaving aside the effort in building the detector, which by itself is worth several millennia of personpower).

To give you an example of the kind of hair-splitting studies required to pull off a precise measurement of the W mass with CDF, I will mention the alignment studies that kept the researchers busy for a while. The inner tracking chamber of CDF is called COT (Central Outer Tracker). It is made of a large number of very thin wires that create an electric field in a gaseous mixture. When charged particles produced in the decay of the W boson (electrons or muons) whiz through, they ionize the gas and the resulting ions and electrons are collected by the wires, providing an electronic signal which tells us the particle position. From this, the trajectory of the particles is inferred; and from the trajectories, a measurement of their momentum is possible (because they travel a curved path in the gas, as they are subjected to a strong magnetic field).
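To make the link between curvature and momentum concrete, here is a minimal sketch of the textbook relation for a unit-charge particle in a uniform magnetic field, p_T ≈ 0.3 B R (p_T in GeV, B in tesla, R in meters). The 1.4 T field and the 40 GeV muon are illustrative assumptions of mine, not numbers taken from the CDF paper, and the function names are made up for this example.

```python
# Textbook relation between transverse momentum and radius of curvature
# for a unit-charge particle in a uniform magnetic field.
# ASSUMPTION: the field value and the muon momentum below are illustrative,
# they are not taken from the CDF publication.

C_FACTOR = 0.299792458  # GeV / (T * m), valid for a particle of unit charge


def radius_from_pt(pt_gev, b_tesla):
    """Radius of curvature (m) of a track with transverse momentum pt_gev."""
    return pt_gev / (C_FACTOR * b_tesla)


def pt_from_radius(radius_m, b_tesla):
    """Transverse momentum (GeV) of a track with the given radius of curvature."""
    return C_FACTOR * b_tesla * radius_m


ASSUMED_FIELD_T = 1.4        # hypothetical solenoid field
ASSUMED_MUON_PT_GEV = 40.0   # typical scale for a muon from a W decay

radius = radius_from_pt(ASSUMED_MUON_PT_GEV, ASSUMED_FIELD_T)
print(f"radius of curvature: {radius:.1f} m")
```

Under these assumptions the radius comes out at roughly a hundred meters, so the track is almost straight on the scale of the detector. The momentum is extracted from a very small bending, which is why the wire positions matter so much.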

All of the above means that if your assumed positions of the wires are wrong, the trajectories you infer are wrong, and so is the momentum. As the W mass is measured by interpreting the momentum distribution of electrons and muons, you don't want that to happen. A precise alignment procedure of the wires is possible by using control samples of data, such as cosmic rays.

Throughout its history, CDF kept collecting the signal of muon tracks coming from cosmic ray showers in the atmosphere, whenever the Tevatron was taking a break from colliding protons and antiprotons. That dataset allows one to verify where the thin wires of the COT really are, because you can exploit the muon trajectories to determine the wire positions. The alignment procedure is painstaking work, but it pays off: after it is performed, you get to know the position of the wires to within a few micrometers. This is enough to not affect significantly the W mass measurement, but what a huge task that must have been!

If you have the time and the guts to go through the long article that CDF has published to explain in detail their result, and if you manage to understand the details, your jaw is guaranteed to drop a little every now and then. Indeed, one really understands why it took so long to measure the W boson mass - the CDF scientists knew this was their last chance, and they did a wonderful job.

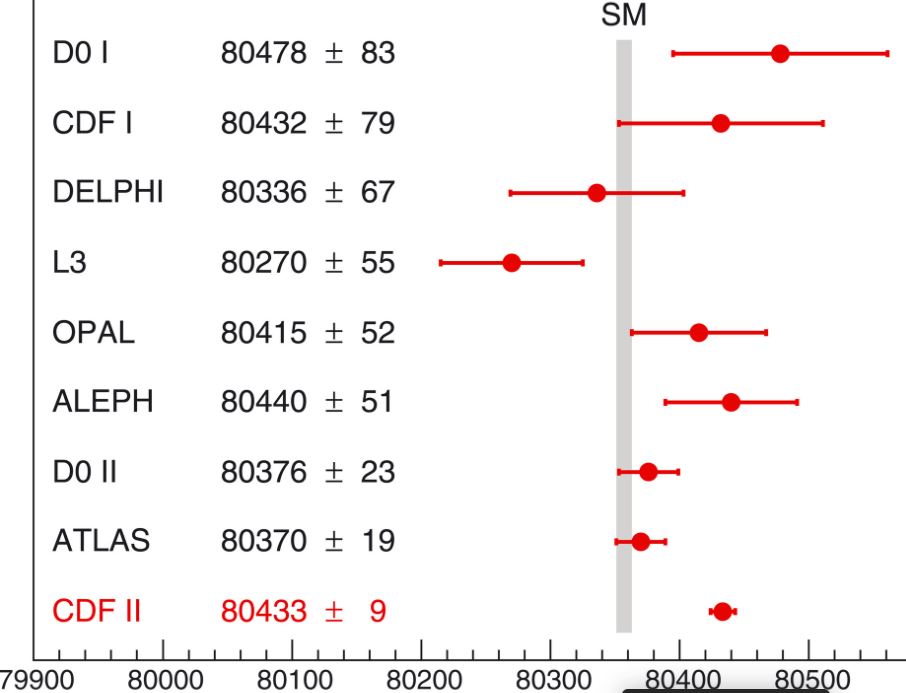

The graph above shows the quality of the CDF measurement (the point with smallest horizontal uncertainty bar at the bottom). When you compare the size of the uncertainty bar of the CDF result to those of previous experimental efforts, it is evident that CDF has outclassed everybody on the planet! On Facebook, I used a little bit of sarcasm when I got to see the graph.

See, the matter is that experimental particle physicists studying the high-energy frontier can be divided into those who love hadron collisions (such as those delivered by Rubbia's SppS, by the Tevatron, and by the LHC today) and those who claim that lepton collisions are "more pure" and more precise. The reason for the "purity" of leptonic collisions is that electrons and positrons, e.g., are point-like particles at the energy scale we test today, while protons and antiprotons are in comparison bags of garbage. You get a lot of energy in the proton beams because protons don't lose energy by bremsstrahlung radiation, but you also get collisions that produce hundreds of irrelevant particles along with those you want to study, and are much more complex to interpret.

A bit of sarcasm to spice things up

Because of the above, hadron colliders have always been perceived as "discovery machines", while lepton colliders should be "precision machines". That is in fact a fair characterization: the SppS, the Tevatron, and the LHC all discovered new fundamental elementary particles: the W, the Z, the top, the Higgs; instead, the LEP electron-positron collider measured with fantastic precision the Z boson mass.

So my Facebook sarcasm went as follows:

Where is the pun? Well, the fact is - we already had such a machine! The LEPII collider, which was constructed by adding accelerating cavities to the LEP ring, allowed the CERN machine in the nineties to produce electron-positron collisions at an energy large enough to produce pairs of W bosons. The clean production process allowed the four LEPII experiments to measure the W mass with decent accuracy... But the fun part is that ALEPH, DELPHI, OPAL and L3 never really touched ball much in the W mass precision measurement game. The final CDF measurement of the W mass proves that the paradigm about lepton colliders having a supremacy in precision measurements is a delusion.

Now the lepton collider fans will argue that LEPII never got a chance at acquiring sufficient statistics because the machine was shut down to allow LHC to be built. True, they got what they got. If LEPII was decommissioned there was a good reason - the machine would not even nearly ever have a chance to produce a scientific output on par with that which the LHC would soon deliver. So I shall rest my case. Lepton colliders are fantastic machines, but sometimes physicists can get more bang from using dirtier equipment by squeezing their brains and working hard - which is what this story is about.

[ Incidentally, in the book "Anomaly!" (see again below) I devote a full chapter to telling the story of the precision measurement of the Z mass, which in 1989 was a contended matter between lepton and hadron colliders for a while, until LEP took over thanks to its dedication to the task and indeed, the possibility to conduct a formation experiment (I will have to skip a description of how you get more precision by using the beam energy rather than a reconstruction of the particle decay here, or this post will become a book by itself). The story is very juicy, with Nobel prize laureates and lab directors playing tricks with one another and post-doctoral students virtually stabbing one another in the back. But yes, that's another story. So let's move on. ]

Is it new physics?

I already answered the question of whether in my opinion the new CDF measurement of the W boson mass, standing at seven standard deviations away from the predictions of the Standard Model, is a nail in the SM coffin. Now I will elaborate a little on part of the reasons why I have that conviction. I cannot completely spill my guts on the topic here though, as it would take too long - the discussion involves a number of factors that are heterogeneous and distant from the measurement we are discussing. Instead, let us look at the CDF result.

One thing I noticed is that the result with muons is higher than the result with electrons. This may be a fluctuation, of course (the two results are compatible within quoted uncertainties), but if for one second we neglected the muon result, we would get a much better agreement with theory: the electron W mass is measured to 80424.6+-13.2 MeV, which is some 4.5 sigmaish away from theory prediction of 80357+-6 MeV. Still quite a significant departure, but not yet an effect of unheard-of size for accidentals.
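The "4.5 sigmaish" figure can be checked with a few lines. This is a minimal sketch that assumes the experimental and theoretical uncertainties are independent and can be added in quadrature; the function name `pull` is just a label chosen for this example.

```python
import math

# Numbers quoted in the text above (all in MeV).
ELECTRON_MW = 80424.6
ELECTRON_MW_ERR = 13.2
SM_MW = 80357.0
SM_MW_ERR = 6.0


def pull(measured, sigma_measured, predicted, sigma_predicted):
    """Difference in units of the combined uncertainty.

    ASSUMPTION: the two uncertainties are uncorrelated, so they are
    combined in quadrature.
    """
    return (measured - predicted) / math.hypot(sigma_measured, sigma_predicted)


electron_pull = pull(ELECTRON_MW, ELECTRON_MW_ERR, SM_MW, SM_MW_ERR)
print(f"electron-channel pull: {electron_pull:.2f} sigma")
```

With the quoted inputs the pull lands a bit above 4.5 standard deviations, in line with the rough estimate given above.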

Then, another thing I notice is that CDF relied on a custom simulation for much of the phenomena involving the interaction of electrons and muons with the detector. That by itself is great work, but one wonders why they did not use the good old GEANT4 that all of us know and love for that purpose. It's not like they needed a fast simulation - they had the time!

A third thing I notice is that the knowledge of backgrounds accounts for a significant systematic effect - it is estimated in the paper as accounting for a potential shift of two to four MeV (but is that sampled from a Gaussian distribution or can there be fatter tails?). In fact, there is one nasty background that arises in the data when you have a decay of a Z to a pair of muons, and one muon gets lost for some reconstruction issue or by failing some quality criteria. The event, in that case, appears to be a genuine W boson decay: you see a muon, and the lack of a second leg causes an imbalance in transverse momentum that can be interpreted as the neutrino from W decay. This "one-legged-Z" background is of course accounted for in the analysis, but if it had been underestimated by even only a little bit, this would drive the W mass estimate up, as the Z has a mass larger than the W (so its muons are more energetic).

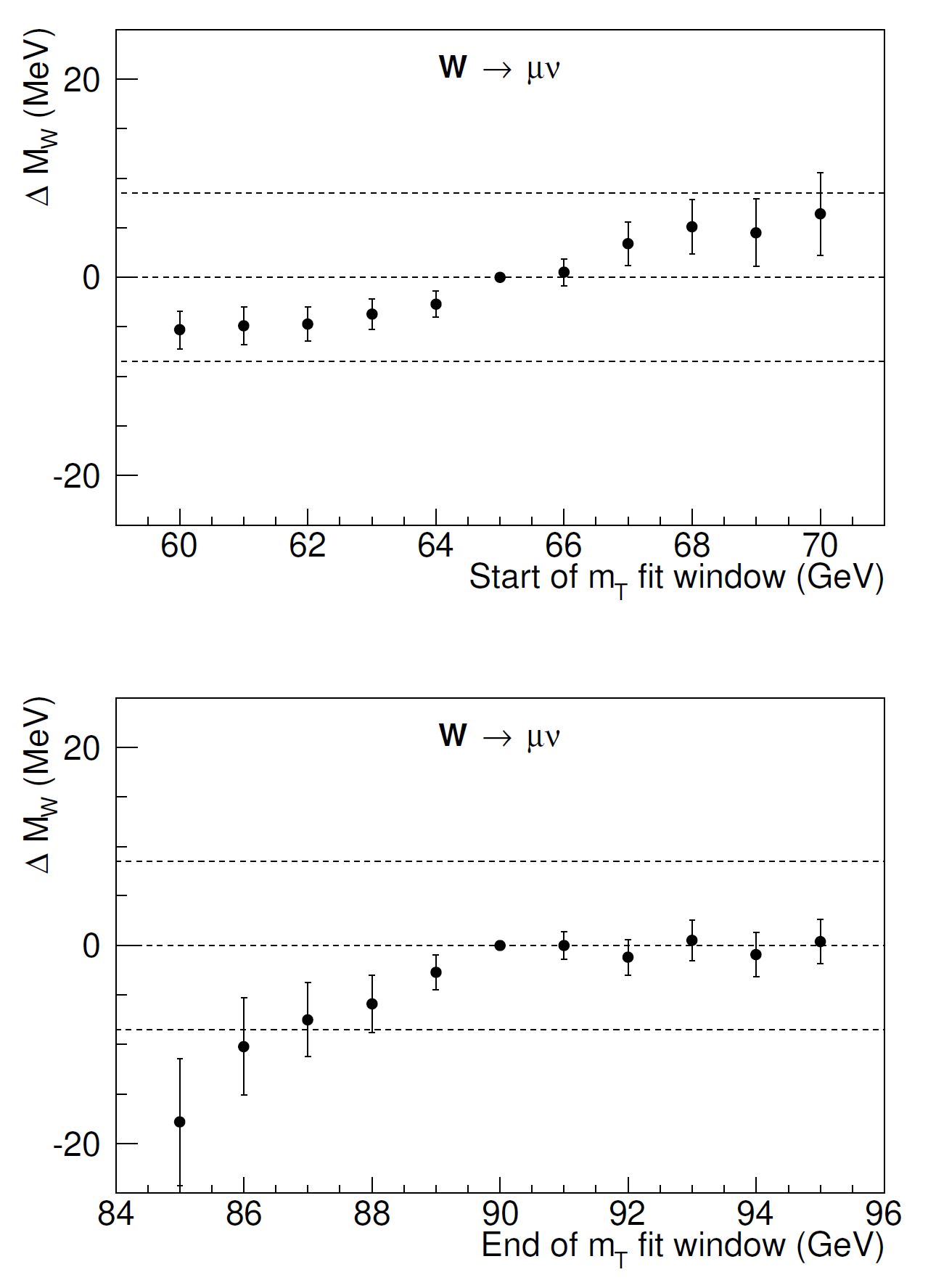

Connected to that note is the fact that CDF does show how their result can potentially shift significantly in the muon channel if they change the range of fitted transverse masses - something which you would indeed observe if you had underestimated the one-legged Z's in your data. This is shown in the two graphs below, where you see that the fitted result moves down by quite a few MeV if you change the upper and lower boundaries:

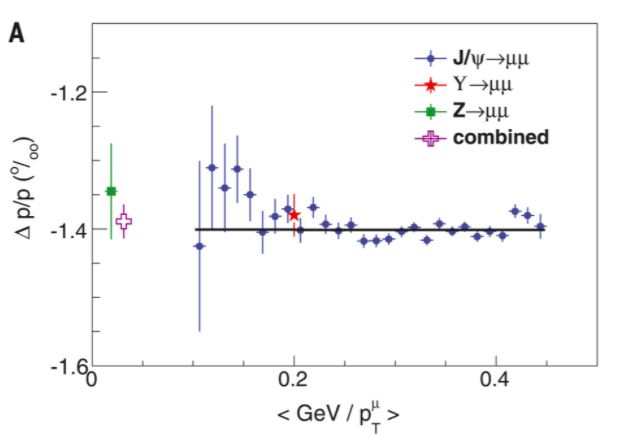

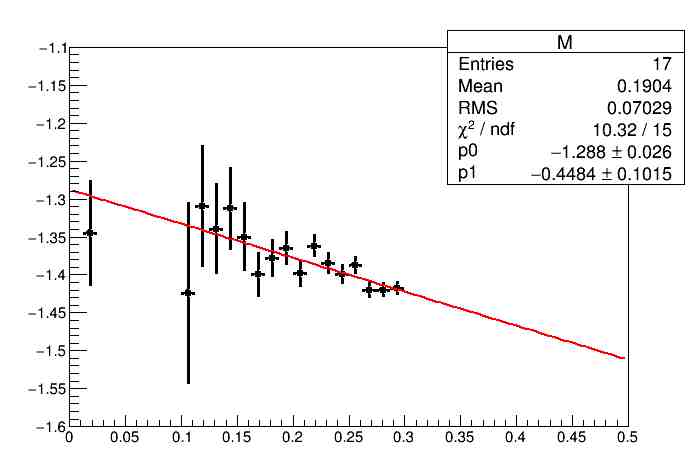

A fourth thing I notice is that the precision of the momentum scale determination, driven by studies of low-energy resonances (J/psi and Upsilon decays to muon pairs) is outstanding, but the graph that CDF shows to demonstrate it looks a bit suspicious to me - it is supposed to demonstrate a flat response as a function of inverse momentum, but to my eyes it in fact shows the opposite. Here is what I am talking about:

I took the liberty to take those data points and fit them with a different assumption - not a constant, but a linear slope, and not the full spectrum, but only up to inverse momenta of 0.3; and here is what I get:

Of course, nobody knows what the true model of the fitting function should be; but a Fisher F test would certainly prefer my slope fit to a constant fit. Yes, I have neglected the points above 0.3, but who on earth can tell me that all these points should line up in the same slope? So, what I conclude from my childish exercise is that the CDF calibration data points are not incompatible (but IMHO better compatible) with a slope of (-0.45+-0.1)*10^-3 GeV.
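For readers who want to see what such a comparison looks like in practice, here is a minimal sketch of an F test between a constant and a straight-line weighted fit. The data points below are invented for illustration and are NOT the CDF calibration points; the uncertainties are assumed uncorrelated (the same caveat as for my quick fit), and all function names are hypothetical. The p-value uses the fact that an F variable with one numerator degree of freedom is the square of a Student t variable.

```python
import math


def fit_constant(y, sigma):
    """Weighted mean and its chi-square."""
    w = [1.0 / s**2 for s in sigma]
    c = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    return c, sum(wi * (yi - c) ** 2 for wi, yi in zip(w, y))


def fit_line(x, y, sigma):
    """Weighted least-squares straight line: slope, intercept, chi-square."""
    w = [1.0 / s**2 for s in sigma]
    sw = sum(w)
    sx = sum(wi * xi for wi, xi in zip(w, x))
    sy = sum(wi * yi for wi, yi in zip(w, y))
    sxx = sum(wi * xi * xi for wi, xi in zip(w, x))
    sxy = sum(wi * xi * yi for wi, xi, yi in zip(w, x, y))
    det = sw * sxx - sx * sx
    slope = (sw * sxy - sx * sy) / det
    intercept = (sxx * sy - sx * sxy) / det
    chi2 = sum(wi * (yi - intercept - slope * xi) ** 2 for wi, xi, yi in zip(w, x, y))
    return slope, intercept, chi2


def student_t_two_sided_p(t, dof, steps=20000):
    """P(|T| > t) for a Student t variable, by Simpson integration of the pdf."""
    norm = math.gamma((dof + 1) / 2) / (math.sqrt(dof * math.pi) * math.gamma(dof / 2))
    pdf = lambda u: norm * (1.0 + u * u / dof) ** (-(dof + 1) / 2)
    t = abs(t)
    h = t / steps
    total = pdf(0.0) + pdf(t)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * pdf(i * h)
    return max(0.0, 1.0 - 2.0 * total * h / 3.0)


# HYPOTHETICAL data: inverse momentum, scale correction, uncertainty (arbitrary units).
x = [0.05, 0.10, 0.15, 0.20, 0.25, 0.30]
y = [-1.30, -1.36, -1.34, -1.42, -1.41, -1.47]
sigma = [0.03] * len(x)

_, chi2_const = fit_constant(y, sigma)
slope, intercept, chi2_line = fit_line(x, y, sigma)

# One extra parameter in the line fit; n - 2 degrees of freedom remain.
dof_line = len(x) - 2
f_stat = (chi2_const - chi2_line) / (chi2_line / dof_line)
p_value = student_t_two_sided_p(math.sqrt(f_stat), dof_line)
print(f"F = {f_stat:.1f}, p = {p_value:.4f}, slope = {slope:.2f}")
```

A small p-value says the extra slope parameter buys a significant improvement over the constant. It says nothing about whether a straight line is the right model, which is exactly the caveat raised above.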

What that may mean, given that they take the calibration to be -1.4 from a constant fit, is to get the scale wrong by about a part in ten thousand at the momentum values of relevance for the W mass measurement in the muon channel. This is an effect of about 8 MeV, which I do not see accounted for in the list of systematics that CDF produced. [One caveat is that I have no idea whether the data points have correlated uncertainties among the uncertainty bars shown, which would invalidate my quick-and-dirty fit result.]
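The arithmetic behind the "about 8 MeV" figure is short enough to spell out. This sketch assumes that the fitted W mass shifts in direct proportion to the momentum scale, which is my simplification for the muon channel and not a statement from the CDF paper.

```python
# Back-of-the-envelope translation of a momentum-scale error into a W mass shift.
# ASSUMPTION: the fitted mass is directly proportional to the momentum scale.

W_MASS_MEV = 80357.0           # SM prediction quoted earlier in the post
FRACTIONAL_SCALE_ERROR = 1e-4  # "a part in ten thousand"

mass_shift_mev = W_MASS_MEV * FRACTIONAL_SCALE_ERROR
print(f"mass shift: {mass_shift_mev:.1f} MeV")
```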

I could go on with other things I notice, and you clearly see we would not gain much. My assessment is that while this is a tremendously precise result, it is also tremendously ambitious. Taming systematic uncertainties down to effects of a part in ten thousand or less, for a subnuclear physics measurement, is a bit too much for my taste. What I am trying to say is that while we understand a great deal about elementary particles and their interaction with our detection apparatus, there is still a whole lot we don't fully understand, and many things we are assuming when we extract our measurements.

So it is a bit arrogant to produce a 10^-4 measurement and claim it is the end of the Standard Model. Mind you, I am not accusing my colleagues in CDF of arrogance! Many of them are close friends, and I hold them, as a whole, in enormous esteem as scientists. All I am saying is that sometimes (and this is one such instance) we have to accept taking a step back and throwing our hands up a little.

A different way of saying the above is to cite the work done in 1975 by a Finnish physicist whom I had the pleasure to meet at a seminar in Helsinki, Matts Roos (really an amazing person, and an artist too). He was able to show, using published results on particle measurements, how the uncertainties had been in general underestimated, and how the residuals (differences between measurements and true values) were not Gaussian at all. See this slide from the seminar:

What does the above mean? It means that if a particle physics measurement (at least, one obtained in the seventies) is off by five standard deviations (a p-value=0.0000003 effect if the sampling distribution is a Gaussian), this might only be a p=0.0003 effect if you consider the fact that residuals of particle physics measurements tend to follow not a Gaussian but rather a Student-10 distribution. So, just hold on with that champagne bottle, you Standard Model haters ;-)
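The two tail probabilities can be reproduced with the standard library alone. This is a minimal sketch that assumes one-sided tails and reads "Student-10" as a Student t distribution with 10 degrees of freedom; the t tail is obtained by a plain Simpson integration of the density, and the function names are mine.

```python
import math


def gaussian_upper_tail(z):
    """One-sided tail probability of a unit Gaussian beyond z."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def student_t_pdf(t, dof):
    """Probability density of a Student t distribution with dof degrees of freedom."""
    norm = math.gamma((dof + 1) / 2) / (math.sqrt(dof * math.pi) * math.gamma(dof / 2))
    return norm * (1.0 + t * t / dof) ** (-(dof + 1) / 2)


def student_t_upper_tail(t, dof, steps=20000):
    """One-sided tail beyond t >= 0: 0.5 minus the Simpson integral of the pdf on [0, t]."""
    h = t / steps
    total = student_t_pdf(0.0, dof) + student_t_pdf(t, dof)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * student_t_pdf(i * h, dof)
    return 0.5 - total * h / 3.0


p_gauss = gaussian_upper_tail(5.0)
p_student = student_t_upper_tail(5.0, 10)
print(f"Gaussian tail at 5 sigma:     {p_gauss:.2e}")
print(f"Student-10 tail at 5 'sigma': {p_student:.2e}")
print(f"ratio: {p_student / p_gauss:.0f}")
```

The fatter tails of the Student distribution make the same five-sigma departure roughly a thousand times more likely, which is the whole point of the comparison.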

This far down in the post, where I know only my relatives will ever reach (yeah, if only), I can also say that the CDF measurement is slamming a glove of challenge on ATLAS and CMS faces. Why, they are sitting on over 20 times as much data as CDF was able to analyze, and have detectors built with a technology that is 20 years more advanced than that of CDF - and their W mass measurements are either over two times less precise (!!, the case of ATLAS), or still missing in action (CMS)? I can't tell for sure, but I bet there are heated discussions going on at the upper floors of those experiments as we speak, because this is too big a spoonful of humble pie to take on. Throw in the fact that there is a chance, at least nominally, to prove that the Standard Model is wrong, and you have the ingredients for a 180-degree change of course in the priority list of the two giant collaborations!

And a final rant - that's my plot, for god's sake!

During yesterday's seminar I had another reason to be surprised, besides the incredibly nice result I was being treated to. The speaker, Ashutosh Kotwal, showed two graphs I had produced 22 years ago to prepare for a talk I gave at a winter conference (Moriond QCD 2000), incidentally one where I was presenting on behalf of the CDF collaboration the new W mass measurement! One of the plots is shown below, this time properly labeled:

Now, what bothers me is that these graphs are so nicely made that people have been using them, over the past 20 years, on a number of occasions - and I never got recognized as the author, damn! But I guess that if I am blogging here, as I have for 17 years now, it is because I want to give back to the community something, in exchange for the greatest gift I was ever given (except bringing me to life) - the internet!

---

Tommaso Dorigo (see his personal web page here) is an experimental particle physicist who works for the INFN and the University of Padova, and collaborates with the CMS experiment at the CERN LHC. He coordinates the MODE Collaboration, a group of physicists and computer scientists from 15 institutions in Europe and the US who aim to enable end-to-end optimization of detector design with differentiable programming. Dorigo is an editor of the journals Reviews in Physics and Physics Open. In 2016 Dorigo published the book "Anomaly! Collider Physics and the Quest for New Phenomena at Fermilab", an insider view of the sociology of big particle physics experiments. You can get a copy of the book on Amazon, or contact him to get a free pdf copy if you have limited financial means.
