"The Truth Wears Off: Is There Something Wrong With The Scientific Method?"

The test of replicability, as it’s known, is the foundation of modern research. Replicability is how the community enforces itself. It’s a safeguard for the creep of subjectivity. Most of the time, scientists know what results they want, and that can influence the results they get. The premise of replicability is that the scientific community can correct for these flaws.

The piece, dressed up in a bit of mysticism, is essentially a description of some well-known (but too rarely acknowledged) biases in science: unconscious selection of favorable data, the tendency to publish only positive results, and the effects of randomness.

But now all sorts of well-established, multiply confirmed findings have started to look increasingly uncertain. It’s as if our facts were losing their truth: claims that have been enshrined in textbooks are suddenly unprovable. This phenomenon doesn’t yet have an official name, but it’s occurring across a wide range of fields, from psychology to ecology.

It's an important point, one that was first taught to me in a physics class when we learned about Millikan's efforts to measure the charge of the electron - a classic case of selection bias.

Lehrer quotes some scientists in his article who suggest that this is science's dirty secret, one that researchers are ashamed of. But why should we be ashamed of this?

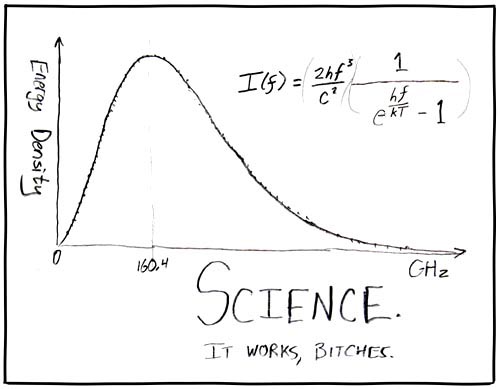

Science is a human enterprise. Mistakes get made. Biases exist. And yet, amazingly, science still works, which is really the only justification for its existence. Science is still the most powerful approach for manipulating and predicting the physical world, period. No other philosophy comes close. With all of its flaws, with science we still manage to build nuclear reactors, create glow-in-the-dark fish, find cancers using NMR, build superconducting materials, send robots to Mars, and track the spread of new flu viruses.

Given this track record, scientists have no need to be ashamed that, even in the absence of fraud, science is imperfect. Feynman puts it more eloquently:

The scientist has a lot of experience with ignorance and doubt and uncertainty, and this experience is of very great importance, I think. When a scientist doesn’t know the answer to a problem, he is ignorant. When he has a hunch as to what the result is, he is uncertain. And when he is pretty damn sure of what the result is going to be, he is still in some doubt. We have found it of paramount importance that in order to progress, we must recognize our ignorance and leave room for doubt. Scientific knowledge is a body of statements of varying degrees of certainty — some most unsure, some nearly sure, but none absolutely certain. Now, we scientists are used to this, and we take it for granted that it is perfectly consistent to be unsure, that it is possible to live and not know. But I don’t know whether everyone realizes this is true. Our freedom to doubt was born out of a struggle against authority in the early days of science. It was a very deep and strong struggle: permit us to question — to doubt — to not be sure. I think that it is important that we do not forget this struggle and thus perhaps lose what we have gained.

"The Value of Science," address to the National Academy of Sciences (Autumn 1955)

Lehrer ends his piece with a misleading statement:

We like to pretend that our experiments define the truth for us. But that’s often not the case. Just because an idea is true doesn’t mean it can be proved. And just because an idea can be proved doesn’t mean it’s true. When the experiments are done, we still have to choose what to believe.

The uncertainty of science doesn't mean that we're simply left with an arbitrary choice of what to believe. You still must follow the evidence, which, as Feynman points out, needs to be weighed because "scientific knowledge is a body of statements of varying degrees of certainty." (This is sorely neglected in most science reporting, which portrays each new paper as a sensational breakthrough.)

A recent result, especially one supported by just a handful of studies (such as the effectiveness of a drug), gets less weight than something that has been accumulating multiple lines of evidence for decades (the mass of an electron, the common ancestry of humans and chimps). Yes, in some rapidly changing fields, some results that are later overturned end up in textbooks. Other results stand the test of time (the structure of amino acids, the genetic code).

We shouldn't be ashamed of varying degrees of uncertainty in science. I'll leave xkcd with the last word:
