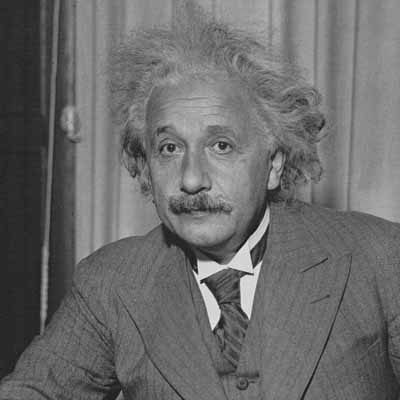

When Albert Einstein constructed his general theory of relativity he decided to resort to some reverse engineering and introduced a 'pressure' term in his equations. The value of this pressure was chosen such that it kept the general relativistic description of the universe stable against the gravitational attraction of the matter filling the universe.

Einstein never really liked this fudge factor, but it was the only way to get the equations of general relativity to describe a universe that is static in size.

More than 10 years later, Edwin Hubble's observations showed that the universe is in fact not static, but expanding. With this, the need for the pressure term disappeared. Einstein must have felt floored: if only he had stuck to the bare equations without the fudge factor, he could have predicted that the universe is non-static. From then on, Einstein kept referring to the introduction of the pressure term as his 'biggest blunder'.

Had Einstein lived until the very end of the 20th century, he would certainly have revised this verdict. Sure, our universe is expanding, but since the end of the 1990s we know that this expansion is accelerating. Today the universe is expanding faster than yesterday, and tomorrow it will be expanding faster still. Without Einstein's fudge factor, a decelerating expansion is to be expected, and the pressure term is needed to switch from a description yielding a decelerating universe to one that yields an accelerating universe.

What is causing this pressure that is pushing space apart at an ever-accelerating rate? Cosmologists refer to 'dark energy' permeating space as what propels this cosmic acceleration. In order to explain the observed accelerated expansion of the universe, this dark energy should comprise the vast majority of the total energy content of the universe. Recent observations point to a 'dark energy density' corresponding roughly to one Planck energy (or equivalently: one Planck mass of about 0.00002 gram) per cube with sides of 1000 km. The fact that this tiny density constitutes the dominant component of our universe just demonstrates the vast emptiness of space.

But what is this 'dark energy'? No one knows. The most likely explanation is that dark energy is quantum mechanical in origin. In fact, most physicists would probably agree that dark energy results from quantum fluctuations, if only this led to predictions of the right magnitude for the dark energy effect. However, the standard quantum field-theoretical (QFT) approach leads to an overestimate of the dark energy density. How much of an overestimate? Well, any statement one can make on this tends to be an understatement. In fact, according to standard quantum field theory, vacuum fluctuations would lead to an energy density of one Planck energy quantum per Planck length cubed. That is a Planck energy per cube with sides of 0.000 000 000 000 000 000 000 000 000 000 000 016 m. A volume a wee bit different from 1000 km cubed.
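To put a number on that mismatch, here is a quick back-of-the-envelope sketch in Python. It uses only the two rounded lengths quoted above (a cube with sides of 1000 km versus a cube with sides of one Planck length); the variable names are mine, and the result is an order-of-magnitude estimate, nothing more.

```python
import math

# Both lengths are the rounded values quoted in the text.
PLANCK_LENGTH_M = 1.6e-35      # side of the "QFT" cube, in metres
OBSERVED_CUBE_SIDE_M = 1.0e6   # side of the "observed" cube: 1000 km in metres

# Both densities are one Planck energy per cube, so the ratio of the
# two energy densities equals the inverse ratio of the two volumes.
volume_ratio = (OBSERVED_CUBE_SIDE_M / PLANCK_LENGTH_M) ** 3
orders_of_magnitude = math.log10(volume_ratio)

print(f"QFT overestimates the density by roughly 10^{orders_of_magnitude:.0f}")
```

With these inputs the overestimate comes out at a little over 120 orders of magnitude.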

This mismatch between the theoretical and experimental values for the dark energy density is euphemistically referred to in the scientific literature as 'the problem of the cosmological constant'. Some push the boundaries of euphemism and refer to the mismatch, tongue in cheek, as the 'fine tuning problem'. Others declare it, more appropriately, to be 'the biggest embarrassment in theoretical physics'.

Where have we gone wrong? Surely one would expect an error of such gigantic proportions to be easy to track down. Yet, more than a decade after the discovery of the accelerating cosmic expansion, experts are still puzzled. Far-fetched solutions such as exotic forms of energy, tachyons, dilatons, and quantum quintessence have been proposed. None of these proposals have acquired many followers.

Now, I am not a cosmologist and certainly not an expert in the field, so I am sure no one will blame me for describing here yet another potential blind alley off the long and winding road of scientific trial and error towards the distant goal of understanding our dark universe. So let us take the liberty to follow Einstein and once more apply some reverse engineering to the problem.

A simple dimensional analysis hints at a solution. There are two key length scales entering the problem: the Planck length ℓ and the diameter of the universe L. The contrast between the two is vast: 61 orders of magnitude. Wouldn't it be a huge surprise if these two extreme length scales can be combined into a volume of the right size to describe the dark energy density? Well - surprise, surprise - this is easy to achieve. The experimental value of the dark energy density happens to coincide with one Planck quantum per volume of size L²ℓ. Yet, as we saw above, standard quantum field theory predicts a zero point energy density of one Planck quantum per ℓ³. Can we change two of the ℓ's in this equation into L's?
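This coincidence is easy to check numerically. The sketch below is only a rough check: the Planck length is the rounded value quoted earlier, while the value for L (about 8.8 × 10²⁶ m, a commonly quoted figure for the diameter of the observable universe) is my assumption and does not appear in the text above.

```python
import math

PLANCK_LENGTH_M = 1.6e-35      # Planck length, rounded value quoted earlier
UNIVERSE_DIAMETER_M = 8.8e26   # L: ASSUMED diameter of the observable universe

# Contrast between the two length scales, in orders of magnitude.
scale_contrast = math.log10(UNIVERSE_DIAMETER_M / PLANCK_LENGTH_M)

# Volume L^2 * l, and the side of a cube holding that same volume.
holographic_volume_m3 = UNIVERSE_DIAMETER_M ** 2 * PLANCK_LENGTH_M
equivalent_cube_side_km = holographic_volume_m3 ** (1.0 / 3.0) / 1000.0

print(f"L / l spans about {scale_contrast:.1f} orders of magnitude")
print(f"L^2 * l matches a cube with sides of about {equivalent_cube_side_km:.0f} km")
```

Under this assumption the equivalent cube has sides of a few thousand kilometres, the same order of magnitude as the 1000 km cube that the observations suggest.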

Yes we can. The key is to realize that the ℓ³ volume enters into the theoretical description because standard QFT assumes one degree of freedom per Planck cube. So according to QFT our universe has a total of (L/ℓ)³ degrees of freedom. This, however, ignores the holographic nature of our universe that was postulated by Gerard 't Hooft in 1993. The holographic principle states that standard QFT vastly overestimates the number of degrees of freedom available. More precisely, the holographic principle forbids a system of linear size L to have more than (L/ℓ)² degrees of freedom. So, this in itself already changes one ℓ in the equation for the dark energy density into an L.

But there is more. QFT associates a zero-point energy of one Planck unit with each degree of freedom. In a holographic description this is unlikely to be correct. The degrees of freedom in the holographic description are non-local, and as a result the wavelengths corresponding to the zero-point motion are linked to the macroscopic length L, and not to the microscopic length ℓ. This effect (embodied in the so-called 'UV/IR connection') gives us another swap between ℓ and L in the equation for the dark energy density, so that with all holographic effects incorporated we arrive at ℓ/L Planck energies per volume of size ℓ²L, or equivalently, one Planck energy quantum per volume of size L²ℓ. The dark energy density thus derived happens to be the highest density that can be achieved without risking a gigantic gravitational collapse of the whole universe.
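The bookkeeping behind these two swaps can be written out in a few lines. This is only a sketch of the counting argument: E_P stands for the Planck energy, N for the number of degrees of freedom in a universe of size L, ε for the zero-point energy per degree of freedom and ρ for the resulting energy density. These symbols are my shorthand, and all factors of order one are ignored.

```latex
\begin{align*}
\text{Standard QFT:}\quad
  & N = \left(\frac{L}{\ell}\right)^{3}, \quad \varepsilon = E_P
  && \Rightarrow\quad
  \rho = \frac{N\,\varepsilon}{L^{3}} = \frac{E_P}{\ell^{3}} \\
\text{Holographic bound only:}\quad
  & N = \left(\frac{L}{\ell}\right)^{2}, \quad \varepsilon = E_P
  && \Rightarrow\quad
  \rho = \frac{N\,\varepsilon}{L^{3}} = \frac{E_P}{\ell^{2} L} \\
\text{Holographic bound and UV/IR connection:}\quad
  & N = \left(\frac{L}{\ell}\right)^{2}, \quad \varepsilon = \frac{\ell}{L}\,E_P
  && \Rightarrow\quad
  \rho = \frac{N\,\varepsilon}{L^{3}} = \frac{E_P}{\ell\,L^{2}}
\end{align*}
```

Each step replaces one ℓ in the denominator by an L, which is exactly the pair of swaps the dimensional analysis asked for.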

Is all this the correct way to look at the expansion of our universe? I don't know. What I do know is that if the above is in essence correct, holographic considerations will be an integral element of the still elusive theory of quantum gravity. It is also clear that the strict holographic cut-offs on the number of degrees of freedom and on the allowed energy per degree of freedom will be of immense help in regularizing this theory of quantum gravity. History tells us that experimentally demonstrated discrepancies in our understanding of the fundamental laws of physics never last for more than a few decades. So I dare to make the prediction that early this century we will witness a revolution in our thinking about the universe in the form of a fully consistent theory of quantum gravity. These are exciting times!
