The issue facing "AI", which is short for Artificial Intelligence, is that the only people who will feel bound by top-down control were never going to be a problem in the first place. Large Language Models, termed Generative AI, can be a huge positive in health care, but because they are misunderstood and there is a lot of exploitative media coverage, there are ongoing efforts to kill them before they really get going.

Large Language Models are actually boring. They have long been used for creating new text, imagery, audio, code, and video in response to textual instructions. Somehow OpenAI’s chatbot ChatGPT, Google’s chatbot Med-PaLM, Stability AI’s imagery generator Stable Diffusion, and Microsoft’s BioGPT bot have become the targets of suppression and bans.

Certainly they can generate false and misleading information, just like automated tools did as long ago as the 2008 presidential campaign. Who believes Twitter did not have AI manipulating it a year ago? Facebook even published a study in PNAS - unethically, but PNAS somehow called it 'peer-reviewed' - in which it experimented with automatically manipulating users' news feeds to see how those users' behavior changed.

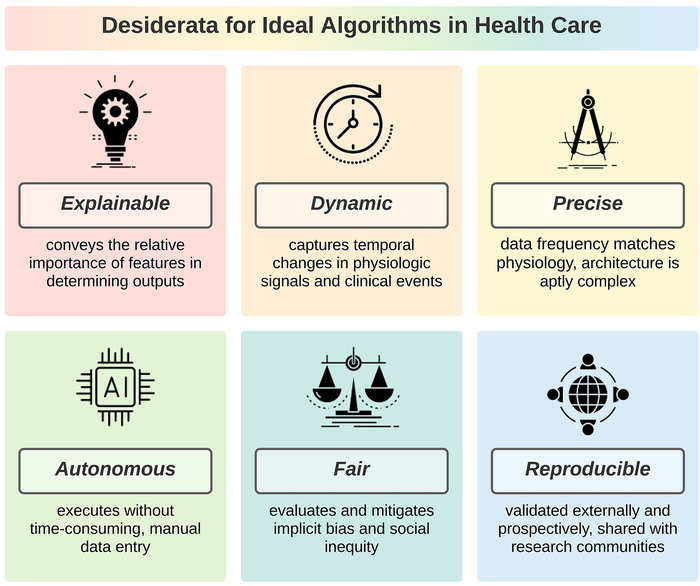

An example of a framework from 2021.

A new paper hopes to give some guidelines, but the horse may have left the barn while casual pundits talk about science fiction stories and make misty appeals to Asimov's laws of robotics, which were only created in the stories to show they weren't laws at all.

Healthcare and automobiles are two areas where using AI, and getting humans out of a lot of the work, will be better for everyone. In the US, regulations, costs, and lawsuit risks in states like California are so high that many doctors won't take patients who use Obamacare, so we need to maximize the time that PAs, osteopaths, or whoever else people will rely on instead of MDs can spend doing medicine. That means getting rid of medical reports and preauthorization forms for insurance companies.

On the research side, AI has been used for decades. No one in drug discovery has been manually writing out compounds, making them, and then seeing if they work in mice, but generative AI could certainly make drug design better.

The paper by Stefan Harrer, Ph.D., of the Digital Health Cooperative Research Centre in Melbourne itemizes a 10-point framework, and I won't repost all of the points here - if you have 10, you already need an AI to help you get them down to 3 - but that is easy enough. A lot of problems will be solved once we go after groups who violate copyright, liability, and accountability regulations to create their training data. But it will take AI to do that, and we know governments will not be able to create competent tools, so that means finding private sector solutions that can. What governments can do is maintain a database of AI usage audits and create regulations - which means penalties for those who violate the policies - on how data sources and training features are used.

It can be that simple. If Asimov's "laws" were written today they would be a list 3,000 long, and therefore useless, and that is the concern about over-regulating AI. Grifters like the snake oil salesmen of 100 years ago will always exist. Today they sell supplements and use terms like holistic, alternative, and integrative medicine, but thanks to FDA those products are only sold to people who want to believe in magic - the sellers are not allowed to make scientific claims for them, like curing cancer. And that is a sandbox for AI also. Create whatever you want if it helps someone with a virtual girlfriend feel whole, but for important applications like health care we'll all benefit from ethical rigor.
